I. Introduction – Welcome to the Ethical Twilight Zone

Picture this: You’re sipping your coffee, scrolling through Instagram, and boom—your favorite movie star pops up in an ad, passionately endorsing a new protein shake. You smile, nod, and maybe even click “add to cart.” Only later do you find out… it was a deepfake.

Welcome to the ethical twilight zone of digital marketing, where what you see is no longer what you get, and your favorite celebrity might be a cluster of GANs and motion capture instead of flesh and blood.

Thanks to deepfakes, AI avatars, and eerily realistic synthetic voices, marketers now have a powerful (and controversial) new toolkit. From resurrecting legends like Audrey Hepburn for chocolate commercials to deploying AI influencers who never eat, sleep, or age (hello, Lil Miquela), the line between creativity and deception has never been blurrier.

This matters. Why? Because we’re already seeing cracks in consumer trust. If people can’t tell what’s real, they might stop trusting anything at all—especially your brand.

In this blog, we’ll deep-dive (pun intended) into digital marketing ethics in this bizarre, AI-enhanced landscape. We’ll untangle issues like deepfakes in advertising, the ethical use of AI in marketing, AI influencer ethics, and where consumer trust fits into all this madness. We’ll also look at regulations, sketchy brand practices, and yes—some folks doing it right.

Ready to pull back the curtain on the age of synthetic persuasion? Grab a snack. This one’s juicy.

II. What Are Deepfakes and AI Avatars?

Let’s start with the basics: what even are deepfakes and AI avatars?

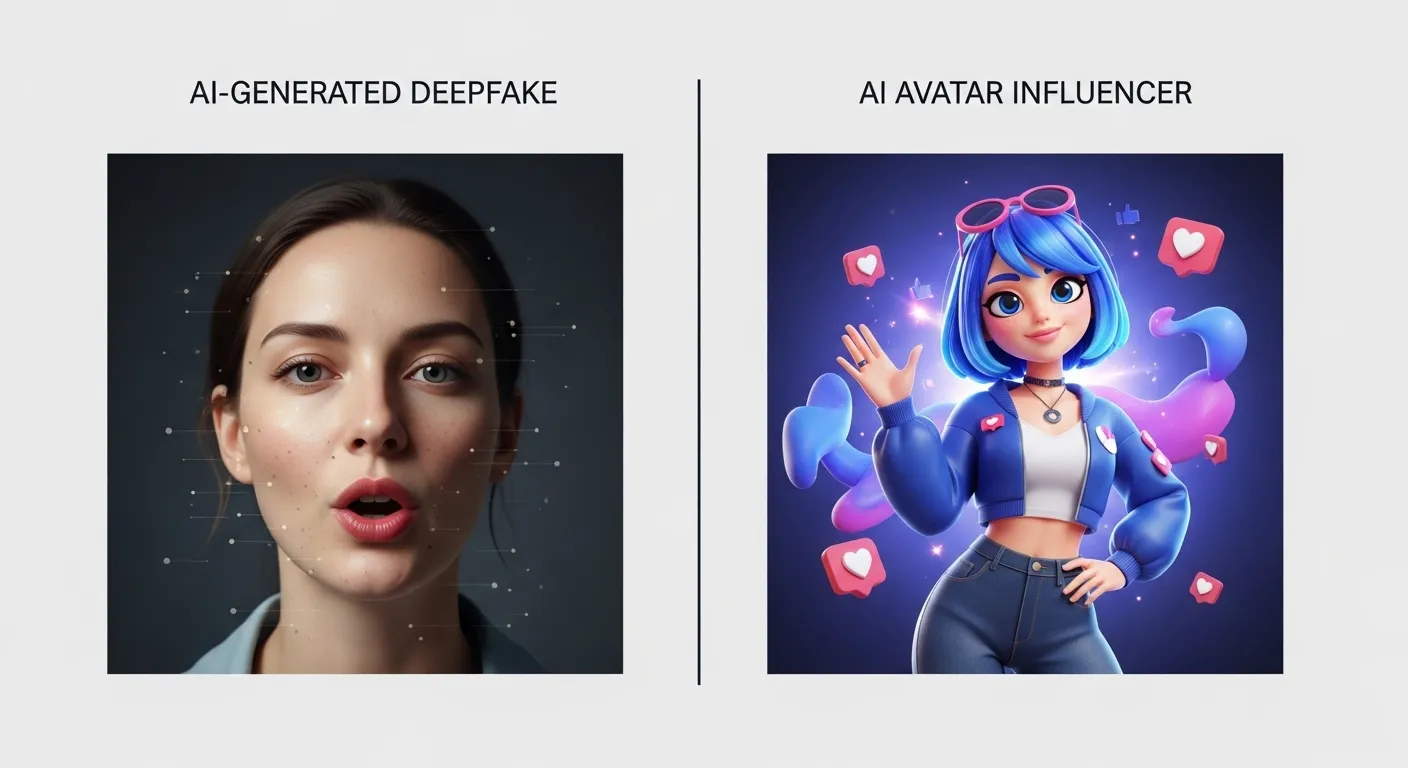

Deepfakes: The Digital Doppelgängers

Deepfakes are synthetic media (usually video or audio) generated by AI to mimic real people. Many are built with Generative Adversarial Networks (GANs), which basically involve two AI models playing a high-stakes game of “Who can fake it better?” Other techniques, such as autoencoders and diffusion models, are common too.

One model generates content, the other critiques it until the result is nearly indistinguishable from reality. Add in some voice cloning and motion capture, and boom—you’ve got someone saying or doing something they never actually said or did.
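For the mathematically curious: in the standard textbook formulation, that game is written as a minimax objective. Here $G$ is the generator, $D$ is the critic (usually called the discriminator), $x$ is a real sample, and $z$ is random noise fed to the generator. This is the generic form, not a description of any particular deepfake tool.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The critic tries to push this value up by scoring real samples high and fakes low. The generator tries to push it down by producing fakes the critic scores as real.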

AI Avatars: Real-ish, But Not Quite

AI avatars are digital characters, sometimes modeled on real people, sometimes entirely fictional. These avatars can be animated, voiced, and even trained to behave like real influencers.

Case in point? Lil Miquela, a CGI influencer with millions of followers and brand deals galore. Then there’s Bruce Lee, brought back to “life” with CGI for a whiskey commercial in 2013. (Spoiler: He did not actually drink whiskey.)

Where Are They Used in Marketing?

- Virtual influencers on Instagram, YouTube, and TikTok
- AI customer service avatars that can “smile” and “nod”
- Resurrected celebrities promoting everything from cars to canned soup
- AI-generated testimonials and product reviews (yes, really)

These tools aren’t inherently bad—they can reduce production costs, boost accessibility, and even increase engagement. But without ethical guardrails, it’s the Wild West out there. And not the fun kind with saloons and spittoons.

III. The Ethical Dilemma: Consent, Deception & Manipulation

Now that we know what we’re working with, let’s talk about the dark side—consent, deception, and psychological manipulation.

The Issue of Consent

Let’s say you’re a beloved actor who passed away a decade ago. You can’t say “yes” or “no” to a brand using your face in a new ad. But thanks to deepfake tech, they might do it anyway.

Using likenesses of deceased individuals without consent (or even with sketchy contracts signed decades ago) is ethically murky. Families may object. The public may feel weirded out. And if your digital ghost is selling energy drinks? Yikes.

Worse still are involuntary deepfakes—when someone’s face or voice is used without their permission at all. It’s happening in politics, entertainment, and, yes, marketing. No opt-in. Just exploitation.

Deceptive Practices in Ads

Ethics takes another hit when brands create simulated endorsements without disclosure. Imagine watching a “testimonial” video from a person who isn’t even real—or, worse, a real person who never actually gave it.

We’re also seeing AI avatars used in reviews, testimonials, or how-to videos that pretend to be human. Is it a helpful tutorial… or a synthetic salesperson?

Manipulation and Psychological Targeting

This is where it gets icky.

Deepfake tech enables hyper-personalized content that can tug on heartstrings, guilt-trip you, or mimic authority figures in emotionally manipulative ways. With enough data, an AI could deliver messages in your grandma’s voice saying, “Buy this probiotic, sweetie.” (Chilling, right?)

Then there’s deepfake-powered microtargeting, where synthetic ads adapt in real-time based on your emotions, behavior, or even biometric feedback. It’s not just personalized—it’s weaponized persuasion.

The takeaway? Ethics in digital marketing isn’t just a checkbox—it’s a battlefield of consent, truth, and emotional integrity.

IV. Consumer Trust in the AI Era

Ah, trust—the bedrock of any good brand. But in the age of AI deception, it’s crumbling faster than a stale cookie.

The Decline of Trust

When you can’t tell what’s real, everything becomes suspect. Trust in online content is already slipping, and the drop is especially pronounced among Gen Z and Gen Alpha, who are growing up with deepfakes as the norm.

Once bitten by a fake ad or testimonial, consumers become jaded. Brand loyalty? Out the window.

Transparency Matters

Studies show that brands that disclose their AI use—loudly and proudly—fare better in the long run. Transparency builds loyalty, even if the truth is “we used AI to make this ad cheaper.” It’s still honesty.

On the flip side, sneakiness backfires. When a brand gets caught passing off AI-generated reviews as real ones, the backlash tends to be swift, meme-worthy, and financially painful.

Ethics = Competitive Advantage

Being transparent, human, and ethical isn’t just morally right; it’s smart business. Ethical branding can restore and even strengthen consumer trust, and AI doesn’t have to be the enemy. But if you hide your synthetic tricks, don’t be surprised when customers ghost you like a bad Tinder date.

V. Laws, Regulations, and Grey Zones

Now, let’s wade into the legal swamp. Spoiler: It’s murky.

What Laws Exist?

- EU AI Act: The EU is ahead of the game, requiring disclosure of synthetic content such as deepfakes, banning a set of “unacceptable-risk” practices, and putting stricter obligations on high-risk systems.
- U.S. Deepfake Laws: A patchwork at best. A few states (like California and Texas) have passed laws, but there’s no comprehensive federal regulation.
- China’s Deep Synthesis Provisions (often called the Deep Synthesis Law): Strong disclosure requirements for AI-generated content, especially in media and e-commerce.

So, there are rules—but enforcement is spotty, and definitions are vague.

Disclosure Mandates: Toothless or Terrific?

Some platforms suggest labeling AI content, but do they enforce it? Rarely.

Without clear penalties, bad actors can run wild. “This ad was generated by AI” often appears in 2-point font—or not at all.

Grey Areas That Make Ethics Murky

- Satire vs Misinformation: Deepfake comedy is legal. Deepfake news? Not so much. But what if it’s both?
- Fictional AI Influencers: If no human is being impersonated, is disclosure still required?
- Platforms: Meta, YouTube, and TikTok have varying policies, but again, enforcement is inconsistent.

Conclusion? The law is still playing catch-up. Until it does, the ethical ball is in your court.

VI. Guidelines for Ethical AI Marketing

Let’s get practical. If you’re a marketer dabbling in synthetic content (or thinking about it), here’s your ethical north star. Responsible AI marketing isn’t rocket science—it just takes a little forethought, honesty, and a functioning moral compass.

1. Transparency: Shine a Light on the Synthetic

This is the golden rule: Always disclose AI-generated content.

- Label your AI avatars.
- Add disclaimers like “This testimonial was created using AI.”
- If your spokesperson isn’t real, say it. Loudly. Clearly.

Think of it like food labels—people want to know what’s in the content they’re consuming.
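If your content runs through any kind of publishing pipeline, the labeling rule can be baked in rather than left to memory. Here’s a minimal Python sketch of the idea. The `Asset` record, its fields, and both function names are hypothetical, made up for illustration; they aren’t from any real CMS or ad platform.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    """A piece of marketing content (all fields are hypothetical)."""
    title: str
    is_synthetic: bool   # True if AI generated any face, voice, or script
    tool: str = ""       # optional: name of the AI tool that was used


def disclosure_label(asset: Asset) -> str:
    """Return the disclosure text for an asset, or "" if none is needed."""
    if not asset.is_synthetic:
        return ""
    if asset.tool:
        return f"This content was created using AI ({asset.tool})."
    return "This content was created using AI."


def caption_with_disclosure(asset: Asset, caption: str) -> str:
    """Put the disclosure first so it is not buried at the end of the caption."""
    label = disclosure_label(asset)
    return f"{label} {caption}" if label else caption


promo = Asset("Spring promo", is_synthetic=True, tool="Synthesia")
print(caption_with_disclosure(promo, "Meet your guide to better budgeting."))
```

Putting the label at the front of the caption is a design choice, not a legal standard. The point is simply that the disclosure travels with the content automatically.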

2. Consent & Licensing: Get That “Yes”

If you’re using someone’s likeness, voice, or signature eyebrow twitch, get permission. Period.

- For living people: use licensing agreements.
- For deceased celebrities: work with estates or representatives.
- For voice cloning: consent is non-negotiable.

Even better? Consider royalties for deepfake usage. That way, it’s not just ethical—it’s fair.
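One way to make “get that yes” more than a good intention is to keep a record of what each person (or estate) actually agreed to, and check it before a campaign ships. The sketch below assumes a made-up `LikenessLicense` record and a made-up `may_use` check. Real licensing terms are far richer than this, and none of it is legal advice.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class LikenessLicense:
    """What someone agreed to (hypothetical, heavily simplified)."""
    person: str
    granted_by: str            # the person, or their estate or representative
    covers_voice: bool
    covers_face: bool
    expires: Optional[date]    # None means no expiry date was agreed


def may_use(lic: Optional[LikenessLicense], *, needs_voice: bool,
            needs_face: bool, on: date) -> bool:
    """True only if a license exists, is still valid, and covers what we need."""
    if lic is None:
        return False                      # no record means no consent
    if lic.expires is not None and on > lic.expires:
        return False                      # the agreement has lapsed
    if needs_voice and not lic.covers_voice:
        return False                      # voice cloning was never agreed
    if needs_face and not lic.covers_face:
        return False
    return True


lic = LikenessLicense("Jane Doe", "Jane Doe", covers_voice=False,
                      covers_face=True, expires=date(2030, 1, 1))
print(may_use(lic, needs_voice=True, needs_face=True, on=date(2025, 6, 1)))
```

The default answer is “no”: a missing record, an expired agreement, or a use the license doesn’t cover all block the campaign.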

3. Authenticity and Integrity: Don’t Be Creepy

AI marketing should enhance, not manipulate.

- Don’t create emotionally charged stories designed to deceive.
- Avoid simulating authority (“As a doctor, I recommend…” from a digital rando).
- Don’t make up quotes, testimonials, or backstories.

If your content wouldn’t pass the “Grandma Test” (“Would I want my grandma to see this and believe it?”), it needs work.

4. Explainability: Pull Back the Curtain

Audiences deserve to understand how your content was made.

- Include “behind-the-scenes” disclosures or explainers.
- Say “This ad was created using Synthesia AI” or “The voice in this podcast is synthetic.”

You can even link to an Ethical AI Statement on your site. Transparency = trust.

5. Frameworks to Follow

You’re not alone. Use guidelines from:

- IEEE: Offers transparency and accountability models
- AI4People: Focuses on ethical AI in society
- Partnership on AI: Great for commercial standards

Ethics isn’t a marketing buzzword. It’s your brand’s armor in a world of deepfake chaos.

VII. Brand Case Studies: The Good, The Bad & The Deepfaked

Let’s bring all this theory to life with real (and not-so-real) brand stories. Here’s who’s nailing it—and who got nailed.

✅ The Good: Synthesia & Corporate Training

Synthesia, a platform for AI-generated video training, gets an A+ in ethical AI marketing.

- They clearly label their synthetic avatars.
- They don’t pretend their avatars are human.
- Their use case (internal training) is non-deceptive and low-stakes.

Their success shows that brand safety in AI doesn’t have to kill innovation. It just means doing the right thing.

🚩 The Bad: Scam Ads Using Deepfake Celebrities

Ever seen Tom Hanks pitch a dental plan online? He didn’t. In 2023 he warned fans that an AI version of him was being used in an ad he had nothing to do with.

These fake endorsements erode public trust—and the real celebrities often have to do damage control. Deepfake regulations are still catching up, so scammers get away with it more often than they should.

Even legit brands have been caught using AI-generated testimonials without disclosure. And guess what? Consumers notice.

🤖 The “Wait, Is That Legal?”: AI Influencers

Virtual influencers like Lil Miquela and Shudu have built large followings (millions, in Lil Miquela’s case). They land partnerships with fashion and tech brands. They tweet. They vlog. They have feuds.

But they don’t exist.

Is it ethical? If the influencer is clearly labeled synthetic and isn’t impersonating anyone real—maybe. But problems arise when their “lifestyle” starts influencing real buying decisions without disclosing that everything—from their likes to their lipstick—is programmed.

What We’ve Learned

These case studies reveal one big truth: The line between ethical and unethical AI use in marketing is razor-thin—and constantly moving.

Brands that lead with clarity, consent, and integrity? They win long-term.

VIII. The Future of AI in Marketing: Ethics by Design

We’re not just reacting to AI anymore—we’re designing with it. So let’s make sure the ethics come baked in.

Reactive vs. Proactive: Don’t Wait for a PR Disaster

Most brands only think about ethics after a scandal. By then, the damage is done.

Ethics by design means asking tough questions before you hit “publish.”

- Is this content honest?
- Would disclosure change how someone views it?
- Are we using AI to trick or to help?

Design your marketing campaigns with guardrails in place—not band-aids afterward.

Marketers as Gatekeepers of Digital Truth

You’re not “just a marketer” anymore. You’re a truth curator. A guardian of brand authenticity.

AI isn’t evil—it’s a tool. But tools need guidelines. Hammers can build homes or break bones. What matters is how you use them.

AI for Good: Accessibility, Diversity & Inclusion

Synthetic content can be amazing:

- Translating messages into 50 languages instantly
- Creating avatars that represent underrepresented identities
- Making content more accessible for the visually or hearing impaired

These are wins. But even here, ethics matters. Don’t tokenize. Don’t pretend. Be real—even if your avatar isn’t.

Gen Z & Gen Alpha Demand It

Today’s youngest consumers are savvy. They can spot AI-generated nonsense from a mile away. But they’ll reward brands that keep it real, call out fakery, and lead with values.

If your ethical compass is broken, they’ll unfollow and call you out on TikTok. Swiftly. Mercilessly.

IX. Final Thoughts: Building Trust in the Age of Synthetic Media

So, where does this leave us?

We’re entering a bold, bizarre, and sometimes beautiful new era of AI in marketing. Deepfakes and AI avatars aren’t going away—in fact, they’re becoming mainstream. But with great power comes great ethical responsibility (thanks, Uncle Ben).

Let’s recap:

- Digital marketing ethics is more than a trend; it’s a survival skill.
- Deepfakes in advertising can be fun or frightening depending on how you use them.
- AI avatars in marketing must come with transparency, consent, and integrity.
- Brands that win consumer trust will disclose, explain, and respect their audience.

Don’t fear AI—just be smarter (and kinder) with it. Audit your AI content. Create an ethics checklist. Be the brand that uses tech not to manipulate, but to connect.

Because in the end, it’s not about synthetic media—it’s about real trust.

🙋♀️ FAQ: Everything You’re Too Polite (or Terrified) to Ask About AI, Deepfakes, and Digital Marketing Ethics

🤖 Q1: Are deepfakes always bad in advertising? Or can they be cool sometimes?

A: Great question—and not just because you’re letting me rant.

No, deepfakes aren’t inherently evil. They’re like drones: they can shoot wedding videos… or start wars. It’s all about how you use them.

Used transparently, deepfakes can make training videos more engaging, personalize marketing, or bring history to life (hello, deepfaked Shakespeare explaining ChatGPT). But used without consent or disclosure, they can slide straight into digital manipulation or even fraud. No one wants to see a deepfaked Morgan Freeman selling toenail cream unless Morgan signed off on it and got royalties.

TL;DR: Deepfakes in advertising can be cool—if you’re honest, get consent, and don’t use Tom Hanks’ face without calling his lawyer first.

👩⚖️ Q2: Is it illegal to use a celebrity’s face in an AI-generated ad? Even if it’s funny?

A: Imagine this: You deepfake Ryan Reynolds into an ad for your kombucha company. It goes viral. People laugh. Ryan’s legal team doesn’t.

Even if it’s funny (especially if it’s funny and successful), it’s still likely a violation of publicity rights. That’s a fancy way of saying: “You used my face to make money, now pay me.”

Also, using someone’s face—even in jest—can lead to brand backlash if it crosses the line into impersonation, deception, or just plain weirdness. Ethical use of AI in marketing means respecting people’s image rights—even if the deepfake is just “a little joke.”

Pro tip: Comedy doesn’t protect you from lawsuits or karma. Or Twitter mobs.

😬 Q3: What if I use an AI avatar that’s not based on a real person? Do I still need to disclose that it’s fake?

A: Technically? Not always. But ethically? Heck yes.

Even if your AI avatar is a fully fictional creation named “Daniella.exe” who loves smoothies and productivity hacks, if she’s designed to look and sound like a real human, your audience deserves to know she’s digital.

Why? Because trust and transparency matter. When people find out that your friendly tutorial host isn’t a human but a cleverly rendered computer character, they might feel misled. That’s how trust erosion starts.

So yes, disclosure is still the ethical high ground. Label your synthetic friend as such. “Hi, I’m Daniella, your AI guide to better budgeting!” = instant clarity, and bonus points for honesty.

🤔 Q4: Why are people even using AI avatars and deepfakes in marketing? Is it just for the hype?

A: Oh sweet reader, gather ‘round.

Marketers use AI avatars and deepfakes for all sorts of reasons—some brilliant, some… budget-driven:

- 💰 Cost savings: No need for reshoots, talent fees, or lighting nightmares.
- ⏱️ Speed: AI content can be created faster than you can say “pivot to video.”
- 🌍 Localization: Want your ad in 15 languages with lip-sync? Boom, done.
- 📈 Scalability: Produce a hundred product videos in a day? No problem, robot friends got you.

But yes, sometimes it’s also about the hype. “Look, our campaign is AI-powered!” often replaces “We have a strategy.” (Spoiler: They don’t.)

Just remember: Just because you can doesn’t mean you should. Ethics, people. Ethics.

😱 Q5: What’s the worst-case scenario with deepfakes in advertising? Like, how bad can it get?

A: Buckle up, friend.

Worst-case scenario? You fake a celebrity endorsement → it goes viral → the celeb sues → your brand becomes a meme → public trust nosedives → your interns flee → your investors weep → you become a case study in marketing classes titled “How to Burn Brand Equity in One Click.”

Also: Regulatory bodies (like the FTC in the U.S.) are starting to crack down. Deceptive advertising is punishable, deepfakes or not.

If your brand becomes synonymous with manipulation, no clever AI will save you from consumer wrath. Just ask any brand that’s been caught using fake testimonials or CGI influencers without disclosure. Twitter (or Threads?) will have your head.

💡 Q6: Okay, but what does “disclosure” actually look like? Is a footnote enough?

A: Ha! Cute.

“Disclosure” isn’t hiding fine print in a corner like your ex hiding their DMs. It’s clear, visible, and understandable to regular people—not just lawyers or software engineers.

Try:

- 🎯 A short note in the video: “This content was generated using AI.”
- 🧠 A label under the avatar: “This is a synthetic spokesperson created with [tool].”
- 🔗 A landing page with an “AI Transparency Statement.”

Don’t bury it in your privacy policy. If people have to squint or scroll, it doesn’t count.

🧓 Q7: How do Gen Z and Gen Alpha feel about AI marketing? Do they even care?

A: Oh, they care.

You might think Gen Z and Gen Alpha, raised on TikTok filters and AI art, wouldn’t bat an eye at synthetic marketing. But plot twist: they value authenticity more than any previous generation.

They don’t mind if you use AI—but they want you to admit it. Fake it and hide it? You’re canceled. Use it ethically and openly? You’re the cool, honest brand they’ll tell their friends about.

They also care deeply about diversity, ethics, and transparency. So using AI to “virtue signal” without real values behind it? Yeah, they’ll call you out—on TikTok, in memes, and with receipts.

💀 Q8: What about using dead celebrities in ads? Creepy or clever?

A: You’re asking all the spicy ones.

Resurrecting deceased celebrities using deepfakes is… complicated. It walks a razor-thin ethical line between nostalgia and necromarketing.

Examples:

- Audrey Hepburn in a chocolate ad? Kinda sweet.
- Bruce Lee selling whiskey? Dubious.
- Albert Einstein dancing in a crypto ad? Please don’t.

Even if estates give permission, ask yourself: Would the person have wanted this? Are you using their image respectfully—or just cashing in?

If it feels more like a séance than a campaign strategy, maybe rethink it.

🧠 Q9: Are there frameworks or guidelines marketers can follow to stay ethical? Or is it just vibes and guesswork?

A: Great news—it’s not just vibes (though those help too).

There are several legit frameworks to help marketers navigate this brave new synthetic world:

- 🧭 IEEE’s Ethically Aligned Design – focuses on transparency, accountability, and respect for human dignity.
- 🧬 AI4People – sets out principles for responsible AI in society.
- 🤝 Partnership on AI – offers best practices for synthetic media and disclosures.
- ⚖️ FTC Guidelines – especially relevant for endorsements and truth in advertising.

Use them. Learn them. Stick ’em on your digital fridge. They’re your ethical GPS.

🛠️ Q10: What can I do today to make my AI marketing more ethical? Like, real talk.

A: Love that energy. Here’s your quick-start ethical marketing checklist—now with 200% more common sense:

- ✅ Audit your AI content – What’s synthetic? What’s real? What needs labeling?
- ✅ Get consent – From real humans, estates, and creators.
- ✅ Be transparent – Label everything clearly. Bonus points for humor and clarity.
- ✅ Avoid emotional manipulation – If your AI ad could make someone cry into their cereal bowl, rethink it.
- ✅ Respect culture & identity – Don’t use AI avatars to mimic races, genders, or communities you don’t represent authentically.
- ✅ Educate your team – Don’t let Barry from IT make AI avatars without ethics training.
- ✅ Plan for backlash – What’s your plan if something goes viral for the wrong reasons?

You got this.
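If “audit your AI content” sounds abstract, here’s roughly what the first two checklist items could look like as a script over a content inventory. Treat it as a sketch under assumptions: the `ContentItem` fields and the `audit` function are invented for this example, and your real inventory will almost certainly track more than this.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ContentItem:
    """One row in a content inventory (hypothetical fields)."""
    name: str
    is_synthetic: bool        # any AI-generated face, voice, or script
    has_label: bool           # a visible AI disclosure is attached
    uses_real_likeness: bool  # depicts or imitates a real person
    has_consent: bool         # consent or license is on file


def audit(items: List[ContentItem]) -> List[str]:
    """Return a plain-language finding for every item that needs fixing."""
    findings = []
    for item in items:
        if item.is_synthetic and not item.has_label:
            findings.append(f"{item.name}: synthetic but not labeled")
        if item.uses_real_likeness and not item.has_consent:
            findings.append(f"{item.name}: uses a real likeness without recorded consent")
    return findings


inventory = [
    ContentItem("promo video", True, False, True, False),
    ContentItem("blog photo", False, False, False, False),
]
for finding in audit(inventory):
    print(finding)
```

An empty list of findings doesn’t make a campaign ethical by itself. It just means the two most mechanical checks (labeling and consent) aren’t the thing that sinks you.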

👋 Q11: Last one—Is it possible to build trust and use AI in marketing? Or do I have to pick one?

A: You can absolutely have both. It’s not “trust OR AI”—it’s “trust THROUGH AI.”

Using synthetic content with clarity, creativity, and care? That’s called being a responsible AI marketer. It’s the future, and you’re not just invited—you’re essential.

In fact, brands that lead with transparency, disclosure, and ethical storytelling will win in a world that’s getting increasingly skeptical of digital fakery.

Be bold. Be smart. Be honest.

And please—don’t deepfake Oprah without asking.
